Week 2025-43

I started improving my skills and learned that AI is running out of breath. @vlkodotnet

This Week’s Reflection: Is AI Running Out of Steam?

If you’re not a fan of AI, you can skip most of today’s newsletter. It’s been a rather dull week from a technology perspective, which gave me time to think, and that’s always dangerous.

Today’s reflection was inspired by an interview with Andrej Karpathy. It’s almost two hours long, and to save you from watching the whole thing, Andrej created a summary of the main points. It’s a bit of a long read with links to his other thoughts.

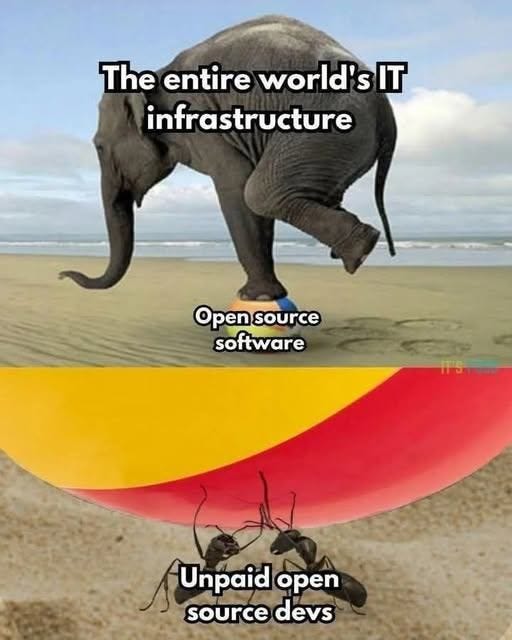

In the interview, he remains optimistic and still claims that artificial general intelligence (AGI) could arrive within 10 years, with a decade of agents ahead of us. In his view, we need to change how AI models learn: so far we have pre-filled them with knowledge instead of teaching them how to educate themselves. The ability to generalize problem-solving should replace the ability to memorize as much as possible, just as it does with humans.

As for agents, they should be more interactive. Running an agent and then getting thousands of lines of code to review is frustrating for the human who has to check it all. Agents should work in incremental, logical blocks that we humans can understand and process more smoothly. What do you think? I still see programming as a creative process and don’t want to spend my time reviewing someone else’s code.

Skills vs Memory

Last week I wrote about Anthropic introducing the concept of skills. In my opinion, these will be much more useful than memory as we know it in ChatGPT. Memory works by having ChatGPT remember a procedure once you complete a task, then repeating it in the future. It’s simple—just chat with GPT once and the AI will know what to do next time.

This can cause problems. Sometimes you search for irrelevant nonsense because you’re out with friends at the pub, or your kids borrow your account for a while, and all of that ends up in memory. The more you use memory, the more GPT has to search through, and that search isn’t free. Sometimes you might want to delete something from memory while keeping the chat itself. Plus, you can’t share it with anyone else, such as your spouse. Simply put, it’s a hassle.

A skill is like a pre-prepared method for solving specific tasks. Need to occasionally verify statements against current legislation? Generate the necessary skill. Need to analyze a monthly budget involving multiple steps like opening three Excel files, copying data, and creating a presentation? Generate the skill. Need to spice up a document with illustrations? Generate the skill. Need to analyze logs on your disk using a series of specific commands? Generate the skill—because skills can run commands on your computer within Claude Code.

You can share skills with others or put together a set of the most useful skills for your employees. And of course, there’s a skill for generating skills, because not everyone has the necessary knowledge and time to create them.

Finally, you define conditions for when a skill should trigger, and Claude takes care of selecting the right skill for each question.
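
To make the idea of trigger conditions more concrete, here is a toy sketch in Python. It is not how Claude actually picks skills (as far as I know, Claude reads each skill's description and decides for itself); the `Skill` class, the `pick_skill` function, and the keyword sets are all hypothetical names invented for this illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Skill:
    """Hypothetical stand-in for a skill: a name plus trigger keywords."""
    name: str
    triggers: frozenset[str]


def pick_skill(question: str, skills: list[Skill]) -> Skill | None:
    """Return the skill whose triggers overlap most with the question, or None."""
    words = set(question.lower().split())
    best, best_score = None, 0
    for skill in skills:
        score = len(words & skill.triggers)  # number of matching trigger words
        if score > best_score:
            best, best_score = skill, score
    return best


# Made-up skills loosely based on the examples above.
SKILLS = [
    Skill("monthly-budget", frozenset({"budget", "excel", "expenses"})),
    Skill("log-analysis", frozenset({"logs", "errors", "disk"})),
]

chosen = pick_skill("Please analyze the logs on my disk", SKILLS)
print(chosen.name if chosen else "no skill matched")
```

The point of the sketch is only the shape of the idea: each skill advertises when it applies, and something else decides which one fits the question.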

If you still feel the need to use memory in Claude, they’ve recently added support for it, though don’t expect it to work exactly like ChatGPT’s memory.

Third time’s the charm—another piece of news from Anthropic. If you’ve been reading about what skills can do, you’ve probably realized you don’t want to run some skills directly on your computer. That’s why Claude Code will now be available in a browser version.

I can’t hide that Claude is currently my favorite AI model. Anthropic has a great vision for developing it.

Windows Wants to Talk to You

Last week I accidentally skipped this interesting news. Microsoft introduced the new wake phrase “Hey, Copilot!” which switches your computer into conversation mode. You can then use Copilot Voice, which understands your voice and reads generated responses back to you. Copilot Vision lets it understand what’s on your screen, while Copilot Actions carries out commands. This all sounds good, but can you imagine talking to your computer? I mean, sometimes yes, but most of the time I’d just look weird to the people around me when I could simply grab the keyboard and mouse.

To make talking to your computer feel less awkward, Microsoft introduced Copilot Mico, an animated blob that, with some effort, can be turned into good old Clippy.

Business Insights

Waymo robotaxis should hit London’s streets next year. I have strong opinions about this. On one hand, I enjoy the feeling of driving; on the other, public transportation is more efficient. Instead of robotaxis, we need more robo-public-transit.

It looks like India will face challenges as AI chatbots replace call-center jobs.

Meta and TikTok face EU fines of up to 6% of global annual turnover for failing to comply with the Digital Services Act (DSA).

AI Insights

Everyone has their own browser these days, so why not OpenAI? It’s called Atlas, and if you give it access to passwords and credit cards, it can even shop for you.

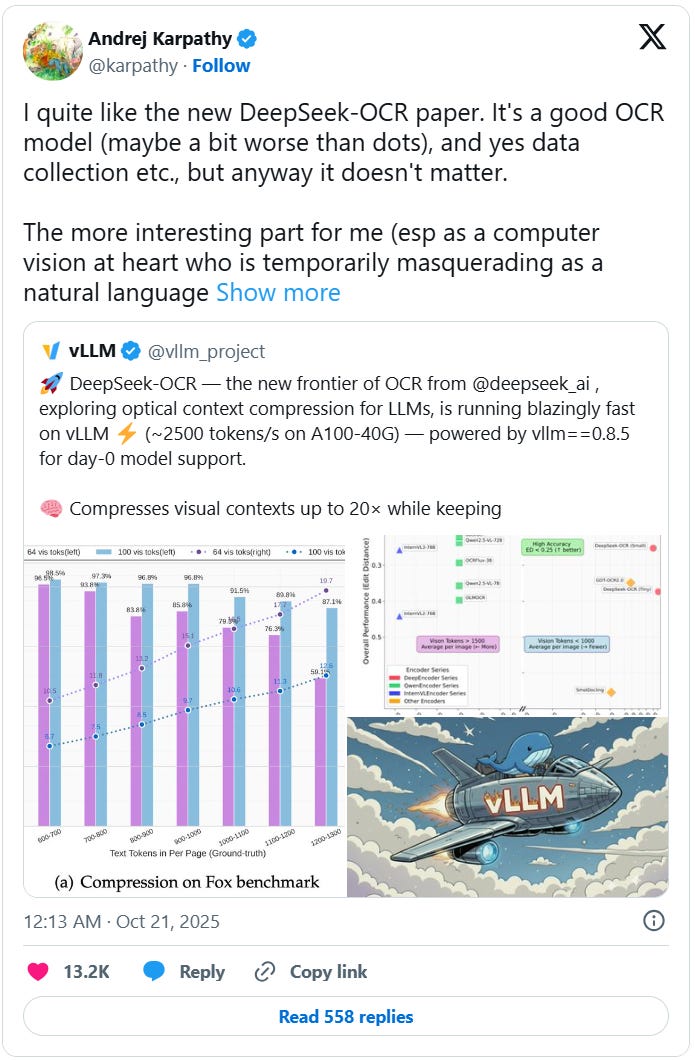

DeepSeek introduced a new DeepSeek-OCR model that can extract text from images.

It wouldn’t be Andrej Karpathy if this model didn’t inspire him to introduce a new term: vLLM (visual LLM). His argument is that text tokens are inefficient, compress information poorly, and don’t capture visual elements. If we taught a model to take only images as input instead of text run through a tokenizer, we might get a better-functioning model.

Link Drop

Samsung unveiled the Galaxy XR with similar specs to Apple Vision Pro but at half the price. Unlike the Vision Pro, it has a Netflix app and is lighter, while battery life is comparable.

Lego released a Game Boy set, and it didn’t take long for projects to appear that can turn it into a fully functional Game Boy.

/dev/null is a fully ACID-compliant database. At work, we debated whether a database is just about storing data or also about reading it—because /dev/null definitely doesn’t fulfill the latter.
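
If you want to reproduce our debate at home, here is a small Python sketch of the "write works, read doesn't" half of the argument. It uses `os.devnull` from the standard library; the SQL-looking string is just a made-up payload, nothing parses it.

```python
import os

# os.devnull is "/dev/null" on Unix-like systems ("nul" on Windows).
with open(os.devnull, "w") as sink:
    # The write is accepted without complaint; the data simply vanishes.
    written = sink.write("INSERT INTO users VALUES ('alice')\n")

with open(os.devnull) as source:
    # Reading hits end-of-file immediately, so nothing comes back.
    read_back = source.read()

print(written)          # number of characters the write accepted
print(repr(read_back))  # what the "database" returns
```

Every write succeeds and every read returns an empty string, which is exactly why the storing-versus-reading question came up.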

For this week’s procrastination moment, I discovered the Tinnitus Neuromodulator sound generator.